When Justice Falls Silent

March 11, 2025

I have been writing and rewriting again and again for two weeks now, unable to voice the agony I feel as the proceedings against Armenian prisoners...

Hi! I'm Manana, and you've found my cozy and chaotic corner of the web. I am a builder, a leader, a creative mind and an innovation geek. My path took me from the transformative years at UWC Dilijan international school to diving deep into Data Science and Economics at UC Berkeley, and later to applying AI to brain signal data at Harvard's Kreiman Lab.

These days, I'm a product manager at IBM Data & AI, where I launched IBM Data Product Hub from zero to one. At IBM, I've worn different hats - from taking patented tech to market to crafting data-driven strategies that help senior leaders make better decisions. Think of it as being a translator between innovation and real-world business impact.

When I'm not geeking out over data, AI and product launches, you'll find me throwing dinner parties that turn strangers into friends, making perfumes from scratch, playing piano, or dancing. I move through life in Armenian, English, and Russian (and I've spent four years studying German, but let's just say it's complicated).

My journey has been powered by scholarships - from UWC Davis to Google Generation, Scholar Mundi, AIWA, ARS, Luys, and My Step. I am deeply grateful to the people who created opportunities for me, and I strive to be an enabler myself. To give back to my community, I co-founded DataPoint Armenia with a mission to provide resources for aspiring Armenian data scientists.

Every day, I try to mix technical skills with creative thinking to build something new. Because at the end of the day, that's what gets me excited - building and creating things that make a difference.

Using machine learning to classify human behaviors from brain signals in dynamic noisy environment...

Decoding brain activity in relation to physiological behaviors can be key to advancing medical practice and solving many health-related problems that are yet to be addressed. For example, understanding which brain regions correspond to certain eating behaviors, and how, can lead to successful therapies for eating disorders. Additionally, the ability to predict from neuronal activity when a person wants to move, and which type of movement they intend, can be translated into smart wearable devices - brain-computer interfaces (BCIs) - that give mobility to those who have lost their limbs. However, the successful application of any classification model in a BCI requires that the underlying research, and hence the technology, be robust to the challenges and noise of the dynamic world. My research meets this demand by studying the brain in a dynamic, uncontrolled environment, which makes its results directly applicable to real-life problems, and it shows that well-defined physiological behaviors can be classified with high AUC scores even with simpler ML models.

Dask, H5py, sklearn, TensorFlow, PyTorch, Jupyter Notebook, matplotlib, seaborn, MATLAB

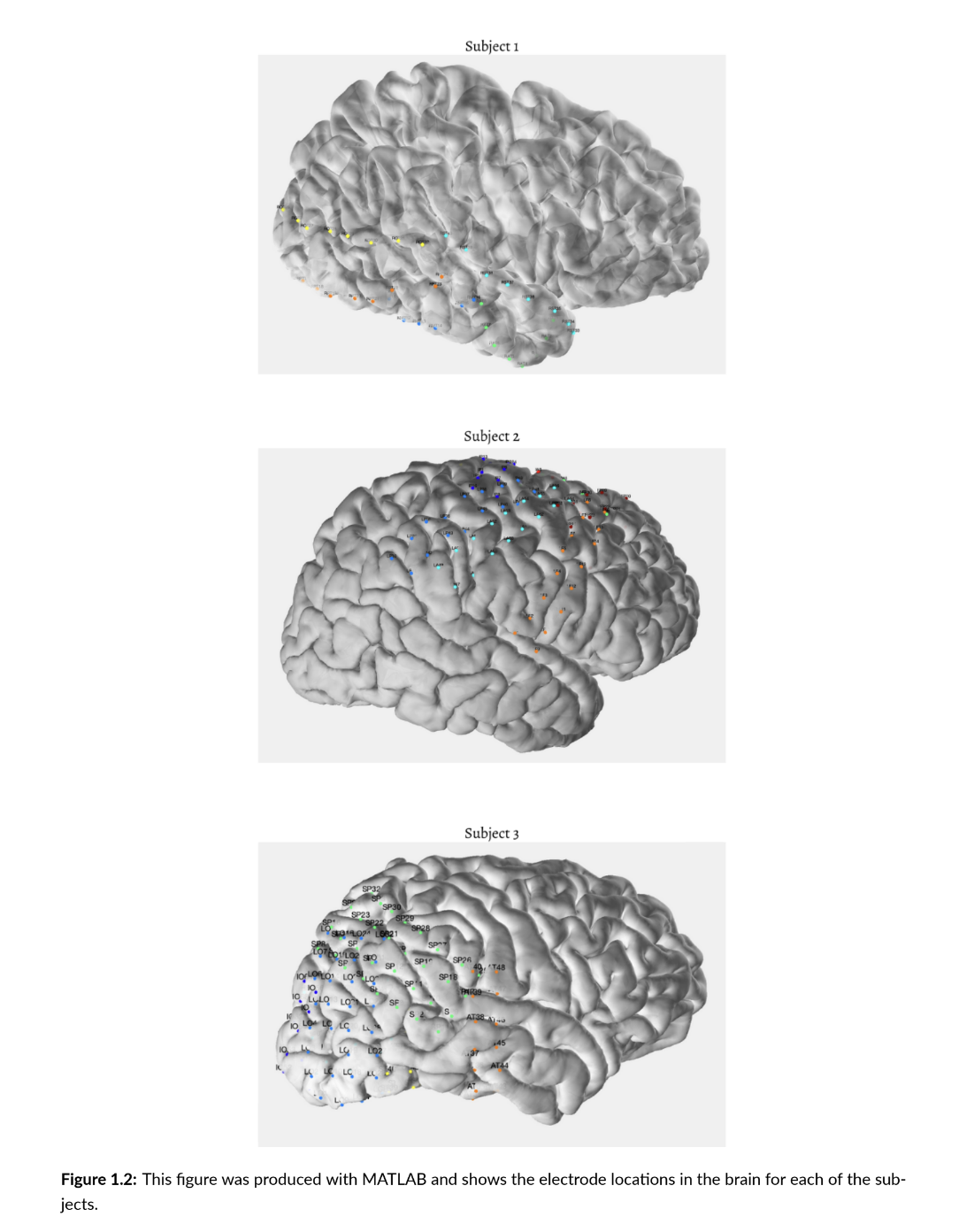

In this study I work with data received from three human subjects who underwent an electrocorticography procedure prior to surgery for severe epilepsy and had electrodes implanted on the surface of their brain for at least a day.

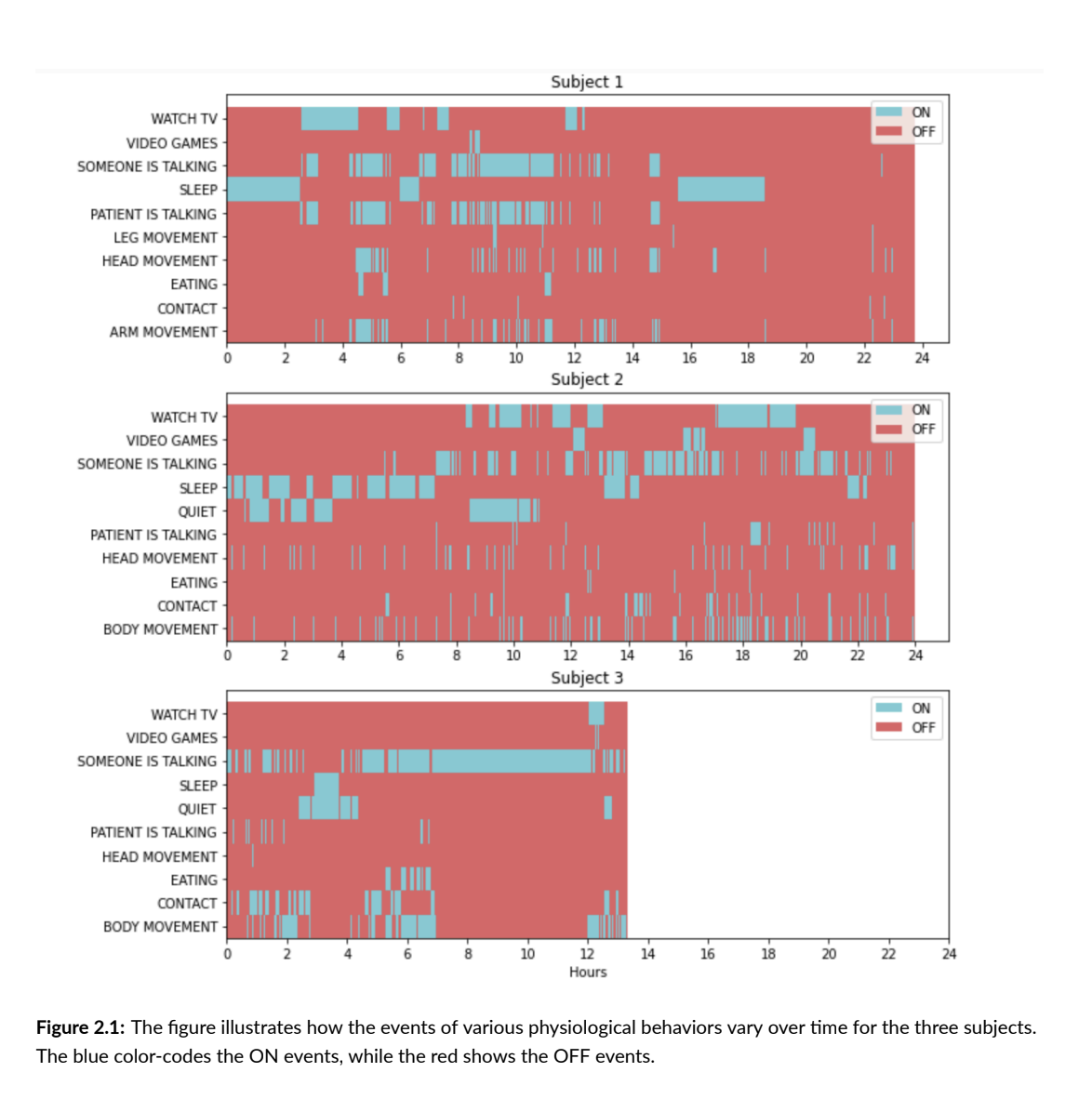

During this time the patients have also been video- and audio-recorded making it possible to know what they have been doing at any second. The knowledge of their physiological behaviors, such as Watching TV, Sleeping, Eating, Talking, Arm, Leg and Head Movements, during the time when their brain activity has been recorded makes it possible to formulate the challenge of this study as a supervised learning problem which is the framework used throughout the paper.

I tried out many machine learning techniques in the initial research phase, an honorable mention being the transformer architecture, which I deployed with the hypothesis that it would learn the inherent structure of the brain signals and uncover common patterns. Towards the end of the research, however, I favored simpler, more interpretable models because they make it transparent which features contribute to the classification success. The best results were achieved with tree-based machine learning models and a combination of time series and frequency features.
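To make the idea of combining time series and frequency features concrete, here is a minimal sketch using only the Python standard library. The feature names, the band edges, the 100 Hz sampling rate and the synthetic sine window are all assumptions for illustration; they are not the features or settings used in the thesis.

```python
import cmath
import math


def band_power(window, fs, low_hz, high_hz):
    """Average power of the DFT bins whose frequency lies in [low_hz, high_hz)."""
    n = len(window)
    powers = []
    for k in range(n // 2 + 1):
        freq = k * fs / n
        if low_hz <= freq < high_hz:
            # Naive DFT coefficient for bin k (fine for short windows).
            coeff = sum(
                x * cmath.exp(-2j * math.pi * k * t / n)
                for t, x in enumerate(window)
            )
            powers.append(abs(coeff) ** 2 / n)
    return sum(powers) / len(powers) if powers else 0.0


def window_features(window, fs):
    """Turn one signal window into a small dictionary of features."""
    n = len(window)
    mean = sum(window) / n
    variance = sum((x - mean) ** 2 for x in window) / n
    # Line length: total absolute change between consecutive samples.
    line_length = sum(abs(b - a) for a, b in zip(window, window[1:]))
    return {
        "mean": mean,
        "variance": variance,
        "line_length": line_length,
        "alpha_power": band_power(window, fs, 8, 13),   # hypothetical band
        "gamma_power": band_power(window, fs, 30, 50),  # hypothetical band
    }


# Synthetic one-second window: a pure 10 Hz sine sampled at 100 Hz (assumed).
fs = 100
window = [math.sin(2 * math.pi * 10 * t / fs) for t in range(fs)]
features = window_features(window, fs)
```

A feature vector like this, computed per electrode and per time window, is the kind of input a tree-based classifier (for example a random forest in sklearn) can consume, and its feature importances then indicate which features drive the classification.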

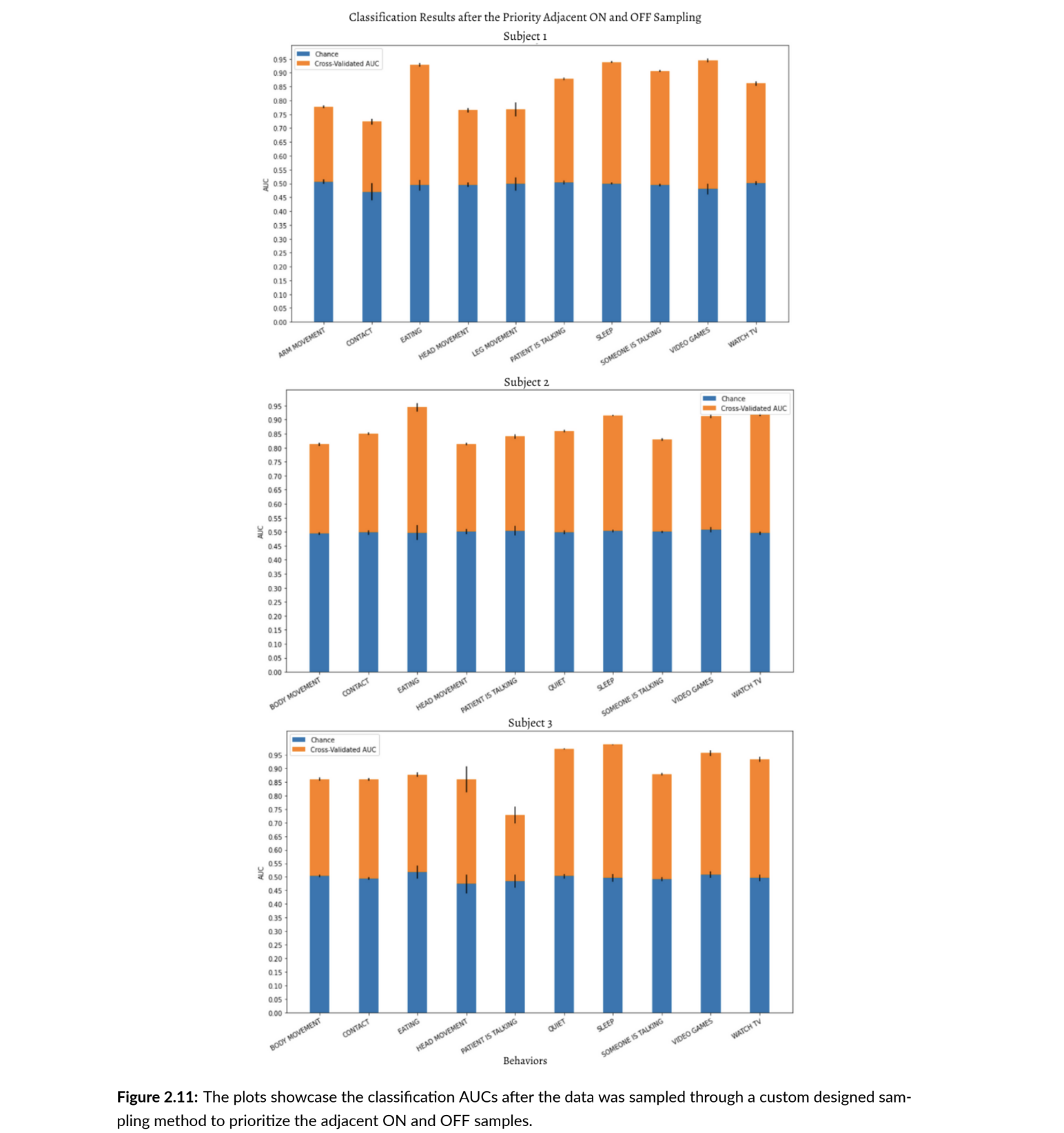

My results showed that it is possible to dynamically classify continuous human physiological behaviors over time. The annotated behaviors can be divided into two groups: longer-lasting events such as SLEEP, QUIET, SOMEONE IS TALKING and WATCH TV; and short but more frequent events such as the movement-based behaviors BODY MOVEMENT, LEG MOVEMENT, ARM MOVEMENT and HEAD MOVEMENT. Generally, the longer-lasting events are decoded better: their annotations are less noisy, and because they last longer, our sampling frequency captures more of the unique features of the underlying brain states. Examples of this good performance are AUCs of 0.90 and higher for SOMEONE IS TALKING and WATCH TV, 0.92 and higher for SLEEP, and 0.93 and higher for QUIET. The movement-based behaviors are usually decoded with AUCs of around 0.9, although in some cases they do better, such as BODY MOVEMENT for Subject 3 at 0.93 AUC. The two outliers that do not follow this pattern are EATING and VIDEO GAMES. The figure showing the ON and OFF annotations for each behavior over time (Figure 2.1) shows that neither EATING nor VIDEO GAMES happens often or lasts long, and yet they are decoded with the highest AUCs (0.97 and 0.95 respectively). Both behaviors engage multiple sensory receptors simultaneously, creating rich sensory stimuli for the brain, and both involve consciously acting on the input information. Prior literature shows that sensory stimuli are in fact highly correlated with activity in the brain regions responsible for processing this input.
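For readers less familiar with the metric, the AUC scores above can be read as the probability that a randomly chosen window where the behavior is ON receives a higher classifier score than a randomly chosen OFF window. The sketch below computes it directly from that definition; the labels and scores are made-up toy values, not data from the study.

```python
def roc_auc(labels, scores):
    """AUC as the chance that a positive outranks a negative (ties count half)."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in positives:
        for q in negatives:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Toy example: 1 = behavior ON, 0 = behavior OFF (hypothetical values).
labels = [0, 0, 1, 1, 0, 1]
scores = [0.1, 0.4, 0.35, 0.8, 0.2, 0.9]
auc = roc_auc(labels, scores)  # 8 of the 9 positive/negative pairs are ordered correctly
```

Because it only depends on how ON and OFF windows are ranked, this metric stays meaningful for rare behaviors such as EATING, where plain accuracy would be dominated by the OFF class.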

I have always had a deep fascination with brain research, but I never thought I would have the opportunity to work on it. I am immensely grateful for the opportunity to have worked under Gabriel Kreiman’s supervision and learned from him and his stellar lab students. I am also grateful to Prof. Pavlos Protopapas for his continued support and guidance throughout my graduate studies. This research project was affiliated with Kreiman Lab, Harvard Medical School and Harvard IACS and was completed as part of my Master's thesis.

The negative externalities of large-scale forest fires were shown to outweigh the benefits of natural forest fires, leading to worsened air quality, erosion, landslides and increased risk of respiratory and cardiovascular diseases. For decades the island of Madagascar has been struggling to manage its forest fires while receiving little attention from the international research community. The current fire management resources and prediction models are based on domain-specific, hand-crafted features which require a separate installment of sensors and adjustment to country-specific needs. These can turn out to be very costly and hard to adapt for the country. We aim to create a cost-efficient, AI-driven approach for predicting forest fires in Madagascar a month in advance. We use existing open-source data from Google Earth Engine to train a neural network for fire detection. Our final model achieves 78% balanced accuracy and 83% fire detection accuracy (recall), outperforming our baseline model.

I had the pleasure to work with Jessica Edwards and Alexander Lin on this project. We were advised by Christopher Golden and Prof. Milind Tambe who teaches “AI for Social Impact” at Harvard University.

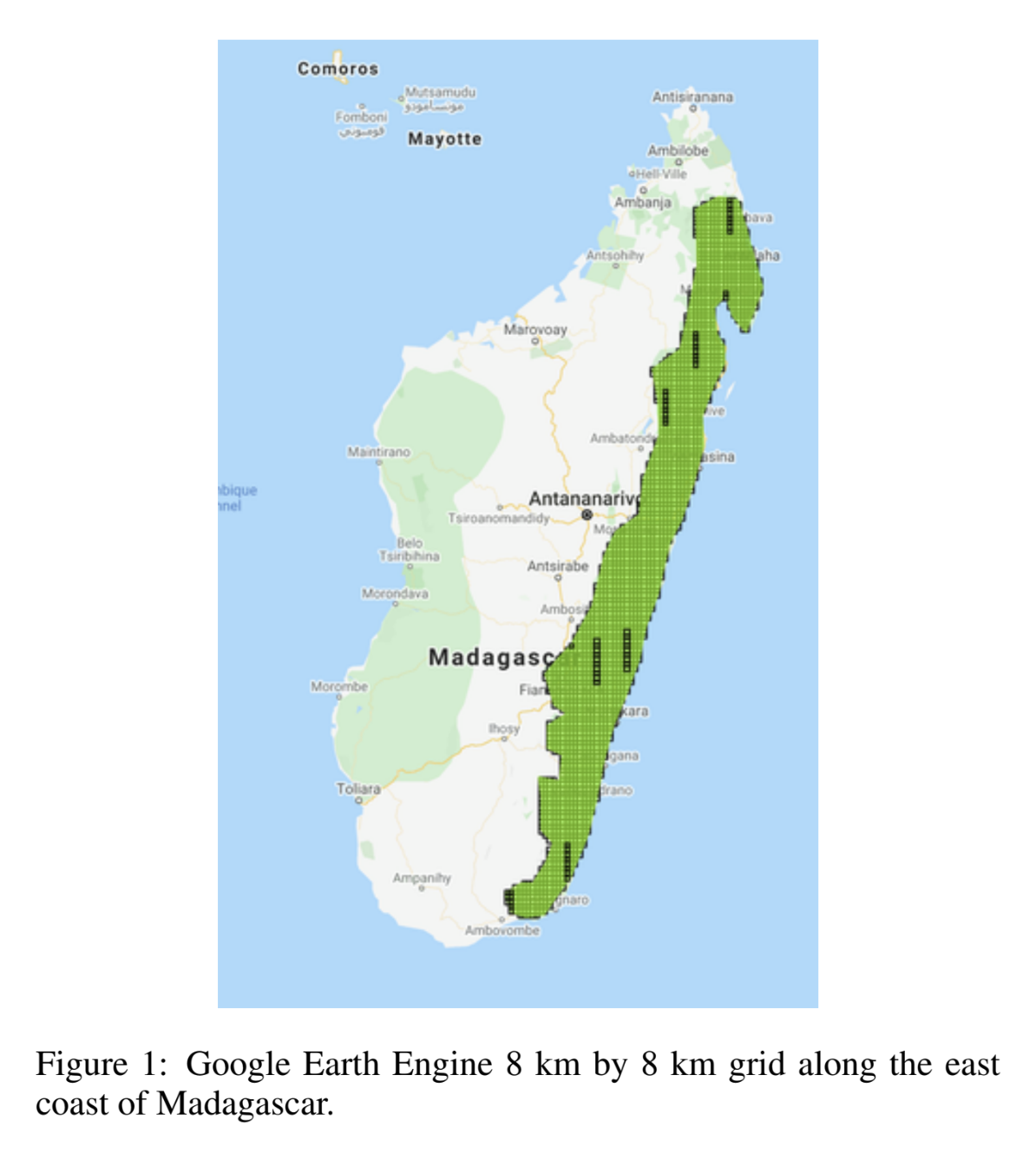

Landsat 7 (U.S. Geological Survey 2019) is part of the Landsat program under NASA and the U.S. Geological Survey and has a 16-day repeat cycle. Satellite images from Landsat 7 obtained through Google Earth Engine are used as the input source for our hotspot prediction model. To train and evaluate the model, we needed ground truth labels. We used the Fire Information for Resource Management System (FIRMS) archive, a dataset provided by NASA and used by several fire prediction systems, and preprocessed the data beginning from 2014 in order to predict fire events roughly a month in advance. To do this, the data was matched with the Landsat 7 satellite image dataset with a 5-week lag for each 8 km by 8 km region.
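The lag matching described above can be sketched roughly as follows. This is an illustration only: the record layout, the region identifiers, the dates and the one-week tolerance window are assumptions, and the project's actual preprocessing may differ.

```python
from datetime import date, timedelta

LAG = timedelta(weeks=5)    # lag between image and fire event, from the text
WINDOW = timedelta(days=7)  # assumed tolerance; not specified in the write-up


def label_images(images, fires):
    """Attach a binary fire label to each (region_id, image_date) record.

    An image is labeled 1 if a fire was recorded in the same region within
    WINDOW of the date that lies LAG after the image was taken.
    """
    fires_by_region = {}
    for region, fire_day in fires:
        fires_by_region.setdefault(region, []).append(fire_day)

    labeled = []
    for region, image_day in images:
        start = image_day + LAG
        end = start + WINDOW
        hit = any(start <= d < end for d in fires_by_region.get(region, []))
        labeled.append((region, image_day, int(hit)))
    return labeled


# Hypothetical records: two regions, three images, two fire events.
images = [
    ("r1", date(2014, 1, 1)),
    ("r1", date(2014, 1, 17)),
    ("r2", date(2014, 1, 1)),
]
fires = [("r1", date(2014, 2, 6)), ("r2", date(2014, 1, 10))]
labeled = label_images(images, fires)
```

Only the first image is labeled as a fire case here: its region burned about five weeks after the image date, while the other two fires fall outside the lagged window.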

PyTorch, Nvidia T4 Tensor Core GPU, FastAI, Hydra, TensorBoard

This project combines state-of-the-art machine learning models with Google Earth Engine’s imagery to create a cost-effective, flexible prediction algorithm for fires specifically in Madagascar. The training dataset is taken from the Landsat 7 satellite and is further preprocessed into histogram bins. It is important to note that the imagery is filtered according to a polygon bounding the entirety of forestland on Madagascar’s east coast, narrowing our predictions down to the areas most susceptible to forest fire. To be more precise with the predictions, we further divided the polygon into small rectangular regions and used them as our units of location. The response variable is matched from the Fire Information for Resource Management System (FIRMS) database, which has an archive of all fire events starting from 2012. Since our goal is a prediction model that allows some time for fire management efforts, the events were matched with satellite imagery with a 5-week lag. We train a neural network that performs binary classification, identifying fire events for each rectangular region a month in advance. The final model achieves 78% balanced accuracy and 83% fire prediction accuracy (recall), exceeding the results of a baseline model. Balanced accuracy is chosen as the main evaluation metric because of the class imbalance in the data. Our approach is the first to predict fires in Madagascar one month in advance, potentially allowing time for fire management efforts.
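To illustrate why balanced accuracy is the more honest metric under class imbalance, here is a small sketch with made-up labels (two fire regions out of ten). It is not our evaluation code, just the definitions written out.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fn, tn, fp) for binary labels where 1 means fire."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    return tp, fn, tn, fp


def accuracy(y_true, y_pred):
    tp, fn, tn, fp = confusion_counts(y_true, y_pred)
    return (tp + tn) / (tp + fn + tn + fp)


def recall(y_true, y_pred):
    """Share of actual fires that were predicted (fire detection accuracy)."""
    tp, fn, _, _ = confusion_counts(y_true, y_pred)
    return tp / (tp + fn)


def balanced_accuracy(y_true, y_pred):
    """Mean of the recall on the fire class and the recall on the no-fire class."""
    tp, fn, tn, fp = confusion_counts(y_true, y_pred)
    return 0.5 * (tp / (tp + fn) + tn / (tn + fp))


# Hypothetical imbalanced sample: 2 fire regions, 8 no-fire regions.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
always_no_fire = [0] * 10  # a useless model that never predicts fire

plain = accuracy(y_true, always_no_fire)              # 0.8
balanced = balanced_accuracy(y_true, always_no_fire)  # 0.5
detected = recall(y_true, always_no_fire)             # 0.0
```

A model that never predicts fire still scores 80% plain accuracy on this sample, but only 50% balanced accuracy and 0% recall, which is why we report the latter two.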

In this project, we explore the method for transfer learning proposed in “Fast Adaptation with Linearized Neural Networks”, a 2021 paper by Maddox et al., using PyTorch code provided by the authors as well as our own implementation developed in TensorFlow. Transfer learning, sometimes also called domain adaptation, refers to a growing number of techniques that enable machine learning models trained on one (source) task to be repurposed for a different but related (target) task. In the context of deep learning, transfer learning aims to adapt the inductive biases of trained deep neural networks (DNNs) to new settings and has particularly compelling advantages: many state-of-the-art (SOTA) DNNs for language modeling and computer vision have billions of parameters and require immense computational resources that pose high financial and environmental costs. Rather than train new models from scratch, we can leverage pretrained models and transfer learning to cut costs and save resources.

A common existing approach to transfer learning in DNNs is to fine-tune the network, but this is computationally expensive and often leads to locally optimal solutions. Fine-tuning only the last layer speeds up computation significantly, but has limited flexibility and is often unable to learn the full complexity of new tasks. The methods proposed in this paper aim to address the limitations of using DNN fine-tuning for transfer learning. In particular, the authors claim that their method is fast and remains competitive with computationally expensive methods that adapt the full neural network. Because their method is built using Gaussian processes (GPs), the authors also claim that it comes with good uncertainty estimates.

For this project I worked with awesome teammates: Dashiell Young-Saver, Morris Reeves and Blake Bullwinkel. We had a lot of fun going to the deep ends of AI research. We are grateful for Prof. Weiwei Pan’s guidance and support throughout the project.

PyTorch, TensorFlow, Jupyter Notebooks

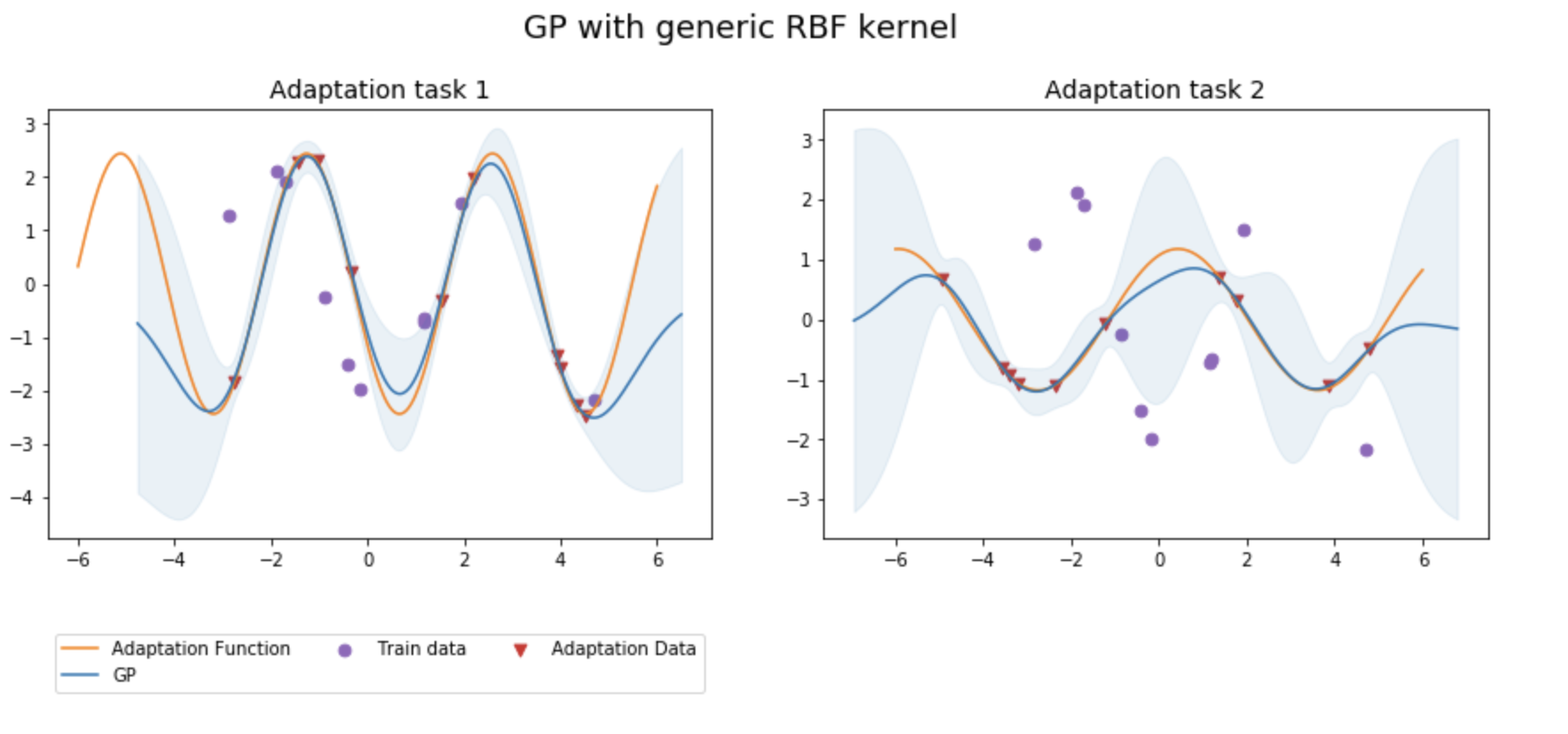

Embedding the Jacobian of a neural network trained on a source task into a GP is a compelling idea for transfer learning. Our primary question is: how does this approach compare to using GPs with alternative kernels? To answer this question, we compare the predictive performance of the finite neural tangent kernel (NTK) on target tasks to that of two alternatives: 1) embedding the Jacobian of an untrained neural network into the GP kernel, and 2) using a generic RBF kernel. As the only distinction between the three methods is where the GP kernel comes from, this comparison will allow us to determine when embedding the Jacobian of a trained neural network into a GP offers advantages over using GPs in more traditional ways, and when it does not. In order to understand the conditions under which the finite NTK is useful, we compare its performance with the two alternative methods on a variety of transfer learning tasks. Using both toy sinusoidal data and real-world electricity consumption data, we vary the complexity of each task (as measured by the difference between the source and target tasks), as well as the number of adaptation points used during GP inference. The paper also claims that, as a GP-based approach, the method comes with good uncertainty estimates. As a secondary objective, we hope to evaluate the quality of these estimates with the help of toy data sets, which offer clear visual evidence of the quality of epistemic uncertainty estimates.
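The difference between the kernels being compared can be sketched in a few lines. The example below uses a tiny one-hidden-layer tanh network with a hand-written Jacobian; the network, its parameter values and the input points are hypothetical, and this is neither the authors' code nor our implementation. In the actual method the parameters would come from a network trained on the source task, while the untrained-network alternative would use freshly initialized parameters in the same kernel.

```python
import math


def jacobian(x, params):
    """Gradient of f(x) = sum_j v_j * tanh(w_j * x + b_j) w.r.t. all parameters."""
    grad = []
    for w, b, v in params:
        h = math.tanh(w * x + b)
        dh = 1.0 - h * h  # derivative of tanh at the pre-activation
        grad.extend([v * dh * x, v * dh, h])  # d/dw_j, d/db_j, d/dv_j
    return grad


def finite_ntk(x1, x2, params):
    """Finite NTK: inner product of the parameter Jacobians at two inputs."""
    j1, j2 = jacobian(x1, params), jacobian(x2, params)
    return sum(a * b for a, b in zip(j1, j2))


def rbf(x1, x2, lengthscale=1.0, variance=1.0):
    """Generic RBF kernel, which knows nothing about the source task."""
    return variance * math.exp(-((x1 - x2) ** 2) / (2.0 * lengthscale ** 2))


# Hypothetical (w, b, v) triples standing in for trained network parameters.
params = [(0.5, 0.0, 1.0), (-1.0, 0.3, 0.7)]
xs = [-1.0, 0.0, 1.0]

ntk_gram = [[finite_ntk(a, b, params) for b in xs] for a in xs]
rbf_gram = [[rbf(a, b) for b in xs] for a in xs]
```

Either Gram matrix can then be plugged into standard GP regression on the adaptation points, so the kernel is the only thing that changes between the methods.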

We compared the predictive performance of the proposed finite NTK method to GPs with alternative kernels. In our experiments, we found that GPs with RBF kernels consistently outperformed the proposed method, suggesting that the inductive biases learned by the trained neural network and adapted into a GP hindered predictive performance. However, the relatively strong performance of RBF kernel GPs could simply be the result of conducting overly simplistic experiments that would not benefit from transfer learning to begin with. Alternatively, it could be that our experiments provide too much adaptation data for the benefits of transfer learning via the finite NTK method to become apparent. Hence, future work could compare the performance of these methods for scenarios in which the adaptation task is: 1) significantly more complex, and 2) particularly data scarce. For experiments in which the benefits of transfer learning may be more apparent, we would also recommend comparing the predictive performance and computational efficiency of the finite NTK with those of traditional methods for transfer learning, including neural network finetuning. Further, it would also be interesting to investigate the effect of different neural network architectures on the performance of the proposed method.

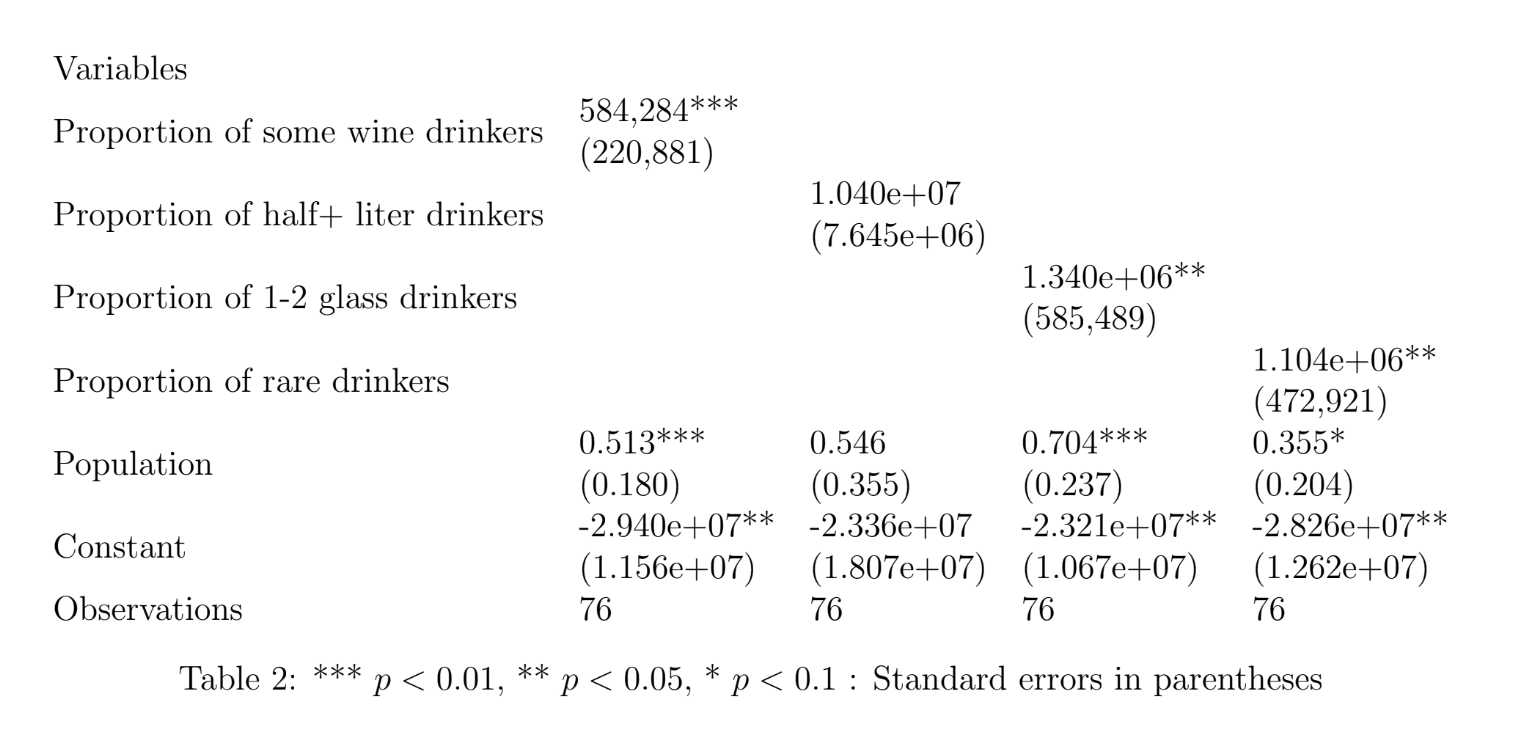

Motivated by increasing interest in cultural goods and their impact on economic output, this case study aims to use regional variation to establish a significant relationship between drinking habits and wine production in 20 regions of Italy. We use yearly wine and grape production, as well as different features of drinking habits for each year since 2013 to document that regions with a high proportion of regular consumers of wine have significantly higher wine output, explained exactly by these drinking habits of the population. To account for possible endogeneity of this hypothetical supply-demand chain, we adopt an instrumental variable approach where wine reviews on popular sommelier website Vivino serve as our instrument. This instrument differences out endogeneity through the demand side, and through establishing a significant relationship, strengthens our conjecture of culturally conditioned economic output. This instrument captures insightful information about the demand shock of the first and fourth specifications of drinking habits defined in the paper - proportion of people who drink some wine on a daily basis and proportion of people who drink wine rarely. We document a significant relationship of wine culture within the society on the economic portfolio of wine output.

I co-authored this paper with my good friend Gevorg Khandamiryan (PhD Economics at UC Berkeley). We thank Professor Eichengreen and Todd Messer for valuable feedback and support throughout the research process.

Wine economics offers multiple insights on how culture can shape the economic output of a certain territory. It is, in fact, within the flow of rising interest in behavioral economics and game theory that culture and traditions can alter the consumption patterns of a typical “territorial good.” This study aims to give historical background for the wine industry in Italy and possible explanations of its development in the late 20th and 21st centuries, as well as to lay the theoretical background for certain changes in supply levels and demand shocks, with a possible establishment of causation between supply and “traditional drinking habits.” Do the existing lifestyle and the increasing casual discussions about wine drive more consumption and hence more production of wine? Is it the position of wine in the center of family social contexts or family traditions that leads these regions to specialize in the production of this drink? Is it the culture revolving around wine that engraves and predetermines the economic portfolio? Our answer is yes. The hypothesis of this study claims that culture shapes the economy: historically fixed habits produce a significant impact on wine output level.

Our sample comprises 13 years and 20 regions - 260 rows in total for wine production and drinking habits. The time period covers 2006 through 2018 for the 20 regions of Italy - Piemonte, Valle d’Aosta, Liguria, Lombardia, Trentino-Alto Adige, Veneto, Friuli-Venezia Giulia, Emilia-Romagna, Toscana, Umbria, Marche, Lazio, Abruzzo, Molise, Campania, Puglia, Basilicata, Calabria, Sicilia, Sardegna. The main (response) variable is the total wine production in hectoliters, while the independent variable of interest is the drinking habit. The data source is the Italian National Institute of Statistics.

Because of possible simultaneous causality between these hypothetical supply and demand variables, we use instrumental variables (the IV method of causal inference) to isolate the movements on the demand side that are uncorrelated with the error terms. We scrape wine reviews from the sommelier website Vivino and use natural language processing tools to quantify the sentiment and polarity of the reviews. Hypothesizing that wine is a socio-cultural good, we believe that opinions and the general public discussion about wine can incentivize or disincentivize its consumption, and that if this discussion has any connection with wine production, the channel through consumption is the only one. Given that wine is an inseparable part of people’s lifestyle and daily choices in Italy, we believe that using wine reviews/recommendations as our instrument can give us a strong first stage. This is our main instrument, and we use it in the main model to account for recommendation-based exogenous demand shocks.
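The logic of the instrumental variable approach can be illustrated with simulated data. Everything in the sketch below is synthetic and hypothetical: `z` stands in for review sentiment, `x` for a drinking habit, `y` for wine output, and `u` for an unobserved factor that affects both. With a single instrument and a single endogenous regressor, the two-stage least squares estimate reduces to the ratio of covariances used here.

```python
import random


def cov(a, b):
    """Sample covariance (population normalization) of two equal-length lists."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    return sum((p - mean_a) * (q - mean_b) for p, q in zip(a, b)) / len(a)


def ols_slope(x, y):
    """Slope from a simple regression of y on x."""
    return cov(x, y) / cov(x, x)


def iv_slope(z, x, y):
    """IV estimate with one instrument: cov(z, y) / cov(z, x)."""
    return cov(z, y) / cov(z, x)


rng = random.Random(0)  # fixed seed so the sketch is reproducible
n = 5000
true_effect = 2.0  # assumed causal effect of x on y in the simulation

z = [rng.gauss(0, 1) for _ in range(n)]  # instrument (e.g. review sentiment)
u = [rng.gauss(0, 1) for _ in range(n)]  # unobserved confounder
x = [zi + ui + 0.5 * rng.gauss(0, 1) for zi, ui in zip(z, u)]
y = [true_effect * xi + 3.0 * ui + rng.gauss(0, 1) for xi, ui in zip(x, u)]

ols = ols_slope(x, y)    # biased upward because u drives both x and y
iv = iv_slope(z, x, y)   # uses only the variation in x that comes from z
```

In this simulation the simple regression overstates the effect, while the IV estimate lands close to the assumed value of 2, because the instrument is correlated with `x` but not with the confounder.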

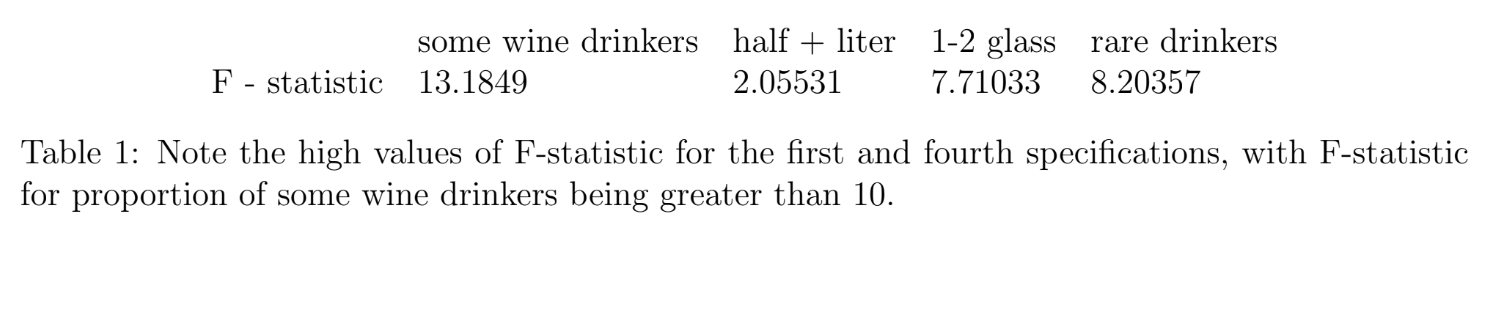

Our main findings indicate that to establish causality we needed to further sub-segment the drinking culture in Italy. The loyal wine lovers, who drink 1-2 glasses every day, show sticky behavior: they are not affected by our instrument of public opinion. Although these agents are core representatives of wine culture, their proportion in the whole population is relatively small, and thus changes in this segment have less impact on the wine industry. The most insightful place to look for explanations is the proportion of people who drink some wine and of rare wine consumers, because it is the behavior of these categories that is most affected by our instrument and that drives large-scale changes in consumption patterns (these categories encompass around 60 percent of the population). Hence, when testing for the instrument's relevance, we found the strongest instrument specifically for some-wine and rare drinkers, as shown in the table below.

It all started in summer of 2019 at UC Berkeley's Moffitt Library. Berkeley wasn't just talking about data science and AI - it was obsessed with it. "Big data", "machine learning" and "data science" weren't just buzzwords, they were part of everyday conversations you'd hear in restaurants and cafes. I was a teaching assistant for "Data Science 100", a course that had grown so popular it was teaching 1,200 students every semester. Looking back, it's no wonder I thought the whole world was as caught up in the data science revolution as we were.

My visit to Armenia that summer hit me like a bucket of cold water. Every time I told someone I was studying "Data Science and Machine Learning", I got the same confused looks. My aunts and uncles would ask me, with genuine concern, "So, how would you use your degree to earn for life?" The gap was clear - while Berkeley was racing ahead in the data science revolution, Armenia was still at the starting line, not just in terms of technology but in seeing its potential.

Coming back to campus, I found friends who'd had the same eye-opening experiences in their home countries, and we knew we had to do something about it. That "something" became DataPoint Armenia - our non-profit organization dedicated to bringing the data revolution to Armenia.

I co-founded DataPoint Armenia with a group of dear friends and talented humans: Armen Hovanissian, Taline Mardirossian, Michael Abassian, Lilit Petrosyan, Gary Vartanian and Gevorg Khandamiryan. I am grateful to each of them for their contributions and passion for the cause. Currently, I lead the organization with Armen, Taline, Michael and Lilit.

DataPoint Armenia grew in ways we never expected. We started simple - creating buzz around data science and getting people excited about its possibilities. Our social media was filled with data visualizations and stories about how data science could transform Armenia's economy. The response was incredible - soon we had over 70 young, ambitious data scientists volunteering their time on projects ranging from economics to healthcare, fighting social media misinformation, and even developing natural language processing for the Armenian language.

As we grew, we knew we needed to create something more structured to really make an impact. That's how our K-Minds summer career accelerator program was born. It's not your typical internship - we connect aspiring data scientists with real companies facing real challenges, and pair them with experienced mentors who know the field inside and out. We've been running this program for three years now, and it's becoming a launchpad for Armenia's next generation of data professionals.

As the world becomes more and more environmentally conscious, we witness the rise of green consumerism in all of our surrounding industries including beauty and cosmetics. Although eco-friendly approaches are gaining popularity in the beauty and cosmetics industry, its production is heavily dependent on single use products and there is still a long way to go before hitting major milestones such as zero-waste production and consumption.

Nonwoven fabrics are a staple in single-use products found in our everyday lives like disposable medical masks, disposable wipes, and bags for agricultural seeds. These nonwoven fabrics are cost effective and easy to manufacture but also mostly non-biodegradable, breaking down into microplastics that severely damage the environment. Our technology combines a patented manufacturing process, focused rotary jet spinning (fRJS), and a process of spinning naturally sourced biopolymer, pullulan, with bioactive ingredients into water soluble nanofibers. Our technology could replace nonwoven fabrics with completely biodegradable pullulan nanofibers.

We explore two routes to commercialize this technology: a direct-to-consumer route and a business-to-business route. In the direct-to-consumer approach we recommend building a strong consumer brand that brings the values of sustainability, customization, and personalization to Gen Z consumers. In the business-to-business approach we recommend establishing a partnership with L’Oréal, the largest global cosmetics company, to supply them with sustainable products in their fastest growing market segment, active cosmetics. Each business plan is our recommended path for its scenario, direct-to-consumer or business-to-business, and we encourage the lab to choose the optimal scenario for themselves and then follow the recommended business plan for that market.

This business plan is crafted in affiliation with Harvard Business School’s “Lab to Market” course by James Bui, Sean Kim, Ally Neenan and myself. The technology is developed and patented by the Disease Biophysics Group at Harvard University.

While I cannot release our detailed business plan with the developed and validated use cases, since it was prepared for the Disease Biophysics Group's own use, I can confidently say that lab-to-market / research commercialization is one of my favorite work areas. Lab-to-market product management bridges two vastly different worlds - the methodical, discovery-focused environment of research and development, and the fast-paced, customer-centric world of commercial markets.

What makes this work particularly exciting is how it demands both creative and analytical thinking. One day you're deep in technical discussions with researchers, understanding the nuances of their breakthrough, and the next you're mapping out market opportunities or crafting value propositions that will resonate with potential customers. It's like being a translator between these two worlds, but also an architect who needs to build the bridge between them.

The challenge of taking something from a laboratory bench to a viable product feels like solving a complex puzzle where every piece matters - the technical feasibility, market fit, scalability, and regulatory requirements all need to align. But when they do, the impact can be transformative. You're not just launching another product; you're introducing innovations that could fundamentally change how things are done in an industry or solve problems in ways people hadn't imagined possible. What I find most fulfilling is that this role makes perfect use of the ability to understand both technical depth and business breadth. You need to dive deep enough into the science to grasp its potential while maintaining the business acumen to spot real market opportunities. It's about being curious enough to ask both "how does this work?" and "who needs this and why?" The process of answering these questions and charting a path forward is where innovation truly comes alive.

March 11, 2025

I have been writing and rewriting again and again for two weeks now, unable to voice the agony I feel as the proceedings against Armenian prisoners take place in Baku - a carefully orchestrated process that challenges what we thought remained of our collective humanity. Ruben has been on a hunger strike for 20 days now, protesting the injustice he faces, and today when I saw his picture, I simply broke down. I mean, how could I not? I met him when I was 16 at UWC Dilijan, the day when he and Veronika first stepped into the new building of the school and shared their dream with us - a vision that would transform so many lives, including mine. The Ruben that I know is a force of positive change, a visionary, a catalyst - a person who knows how to dream.

That picture from today was the portrait of justice fallen silent.

For context: Ruben Vardanyan is a prominent entrepreneur and philanthropist who founded my high school UWC Dilijan, Aurora Humanitarian Initiative, and many other development projects in Armenia. In 2022, he moved to Nagorno-Karabakh to be with fellow Armenians facing the extreme hardships of hunger and post-war effects and became the State Minister the same year. He was detained by Azerbaijani authorities on September 27, 2023, amid the mass exodus of ethnic Armenians following Azerbaijan's military takeover. He has been held in detention since and is reportedly facing multiple charges, including "financing terrorism" and "creating illegal armed groups." At this point, we are aware of numerous violations of his human rights, including the right to a fair trial. He has spent most of his detention in solitary confinement and punishment cells, deprived of basic hygiene, while facing pressure to sign backdated falsified documents and being given only one month to familiarize himself and his defense with a vast cache of case materials in Azeri, a language he does not understand.

Deep down, I know that my inability to write was due to my feeling of powerlessness. I stopped believing that social media claims and posts help - the mere awareness they create seems to bring no action. It is ironic because through my formative years at UWC Dilijan, Berkeley, and Harvard, I had been surrounded by people who shared my morals, aspirations, and motivations to make this world a better place, no matter how cliché it sounds. In those spaces, I truly believed that we could make a difference and improve this world. Now I am looking around with my bubble burst, pleas gone unanswered, and simply disappointed. Where are all the people I looked up to? What can we do? Where did we go wrong? I question the effectiveness of our international institutions. What good are human rights declarations if they cannot be enforced? What purpose do international courts serve if they cannot protect individuals like Ruben from sham trials and inhumane detention conditions?

I question myself too. What more could I be doing?

And yet, I want to write today not just in despair but in defiance - carrying forward the dream of a peaceful world united by education that Ruben and his wife shared with us that day at UWC Dilijan. I hold on to a sliver of hope and light that Ruben and the Armenian prisoners will return to their families, because the hardest battles are given to the strongest people and resilience is in our DNA. I write as one voice among many, a chorus that grows louder with each passing day. I write because somewhere in a cell in Baku, a man who taught me to dream of a better world is refusing to surrender that dream, even now.